Summary

This page explains the architecture and some of the technical challenges behind https://news-haikus.codeandpastries.dev, a side project I worked on in H1 2024 to figure out how to use generative AI in an app.

Principle

The site is a wrapper around Google’s Gemini Pro LLM.

To make the feature fun and interesting, I wanted to see if the LLM could generate haikus, a short form of Japanese poetry. For the site to have new content every day, I decided to generate the haikus from the day’s news titles.

The first version of the site created haikus automatically through a cron job, but that soon became boring. To add some interactivity, I wanted to let users generate their own haikus on demand by choosing a news topic and some hyper-parameters.

At some point, it would be great to let users see and rate other people’s haikus, then display the “best” ones and see which hyper-parameters they were generated with.

Architecture

Stack list

- Site coded in React with the Next.js framework

- Site deployed on Vercel

- News downloaded from The Guardian Open API

- Data saved in Firebase database

- Authentication/Authorization with Clerk

- LLM: Google Gemini 1.0 Pro API

- TailwindCSS for styling

Basic Haiku Generation

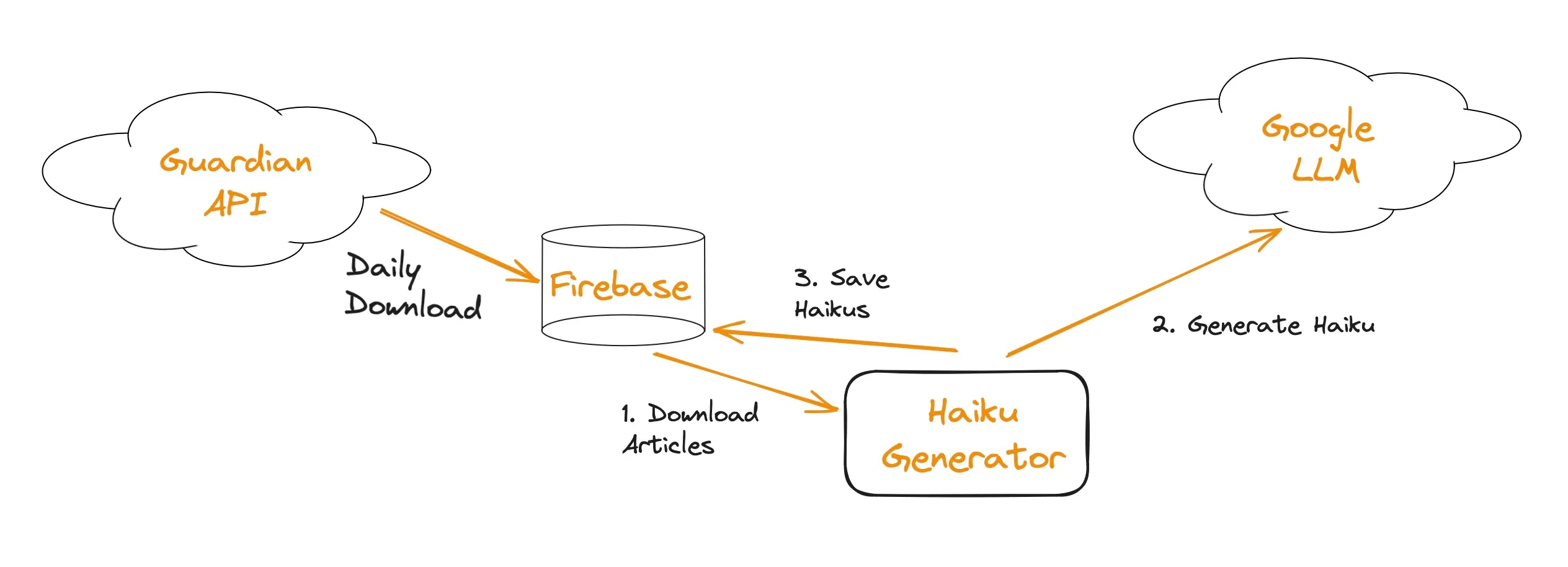

To generate the haikus, we need to:

- Download a selection of news articles

- Select some articles, insert them into an appropriate prompt and use the Gemini API to generate the haikus

- Save haikus into a database to avoid re-generating them all the time

These steps are summarized in the diagram above.
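In code, the three steps could look roughly like the sketch below. It is only an illustration of the flow, not the site's actual implementation: the `Article`, `Haiku` and `PipelineDeps` types and the `runPipeline` function are hypothetical names, and the news, LLM and database calls are passed in as plain functions rather than tied to any SDK.

```typescript
// Hypothetical shapes; the real project's types are not shown in this article.
interface Article { id: string; title: string; }
interface Haiku { articleId: string; text: string; }

// The external services are injected so the sketch does not depend on any SDK.
interface PipelineDeps {
  fetchArticles: () => Promise<Article[]>;            // e.g. a news API call
  generateHaiku: (title: string) => Promise<string>;  // e.g. an LLM call
  loadHaiku: (articleId: string) => Promise<Haiku | undefined>;
  saveHaiku: (haiku: Haiku) => Promise<void>;
}

async function runPipeline(deps: PipelineDeps, maxArticles: number): Promise<Haiku[]> {
  // Step 1: download a selection of news articles.
  const articles = await deps.fetchArticles();
  const selected = articles.slice(0, maxArticles);

  const haikus: Haiku[] = [];
  for (const article of selected) {
    // Step 3 (read side): reuse a stored haiku instead of calling the LLM again.
    const cached = await deps.loadHaiku(article.id);
    if (cached) {
      haikus.push(cached);
      continue;
    }
    // Step 2: generate a haiku from the article title.
    const text = await deps.generateHaiku(article.title);
    const haiku: Haiku = { articleId: article.id, text };
    // Step 3 (write side): save it so it is not regenerated later.
    await deps.saveHaiku(haiku);
    haikus.push(haiku);
  }
  return haikus;
}
```

Keeping the services behind small function types also makes it easy to run the flow against in-memory fakes while experimenting.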

News Articles

For the news articles, I used the Guardian API. I save the articles in a database to make subsequent queries easier. I tried using the LLM to characterize the mood of each article, in order to guess which articles would be more likely to produce interesting haikus. However, the results were not much better than selecting articles at random, and the extra step took too much time, so for now I pick articles randomly and plan to revisit this later.
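As a minimal sketch of this step, the helpers below build a request URL for the Guardian content search endpoint and pick a few articles at random. The function names (`buildSearchUrl`, `pickRandom`) and the choice of query parameters are my own assumptions for illustration; the site's real query is not shown here.

```typescript
// Subset of the fields returned for each search result by the Guardian content API.
interface GuardianResult {
  id: string;
  webTitle: string;
  webUrl: string;
}

// Builds a search URL. Which parameters the site actually sends is an assumption.
function buildSearchUrl(apiKey: string, pageSize: number): string {
  const params: Array<[string, string]> = [
    ["api-key", apiKey],
    ["page-size", String(pageSize)],
    ["order-by", "newest"],
  ];
  const query = params
    .map(([key, value]) => encodeURIComponent(key) + "=" + encodeURIComponent(value))
    .join("&");
  return "https://content.guardianapis.com/search?" + query;
}

// Picks `count` distinct items using a Fisher-Yates shuffle on a copy of the input.
// The random source is injectable so the function can be tested deterministically.
function pickRandom<T>(items: readonly T[], count: number, rng: () => number = Math.random): T[] {
  const pool = items.slice();
  for (let i = pool.length - 1; i > 0; i--) {
    const j = Math.floor(rng() * (i + 1));
    const tmp = pool[i] as T;
    pool[i] = pool[j] as T;
    pool[j] = tmp;
  }
  return pool.slice(0, count);
}
```

The articles come back under `response.results` in the JSON body, so the random selection would typically run on that array, or on the rows already saved in the database.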

Prompt Engineering

The actual quality of a haiku is likely determined by a combination of the prompt and the hyper-parameters. Improving it will be a never-ending cycle of feedback and adjustment: much is still unknown about what LLMs can do, and prompt engineering is still closer to art than science.

Auth and manual generation

To prevent people from overloading the API with scripted generation requests, I decided to restrict haiku generation to logged-in users.

As the site is built with Next.js, one very convenient auth library is Clerk, which offers a great integration and a generous free tier.
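The restriction itself can be sketched as a small guard in front of the generation call. Clerk's server-side `auth()` helper exposes a `userId` for signed-in users, but its import path and whether it is async depend on the Clerk version, so the lookup is passed in as a parameter here instead of being imported. `handleGenerate`, `AuthLookup` and `GenerateResult` are hypothetical names, not the site's real code.

```typescript
// Anything that can tell us who the current user is (for example Clerk's auth()).
// Accepting both a plain value and a promise keeps the sketch version-agnostic.
type AuthLookup = () => { userId: string | null } | Promise<{ userId: string | null }>;

interface GenerateResult {
  status: number;
  body: string;
}

async function handleGenerate(
  getAuth: AuthLookup,
  generate: (userId: string) => Promise<string>,
): Promise<GenerateResult> {
  const { userId } = await getAuth();
  // Anonymous callers are rejected before any LLM quota is spent.
  if (!userId) {
    return { status: 401, body: "Please sign in to generate a haiku." };
  }
  return { status: 200, body: await generate(userId) };
}
```

Because the check runs before the LLM call, a script without a session only costs a cheap 401 response.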

Challenges

Using LLM

The Google Gemini model does not seem to have been trained on much Japanese data, so the haiku quality is not great. One issue with LLMs is that we cannot tell whether the model simply doesn’t know the answer or whether a different prompt would produce a better one. I am using few-shot prompting, a technique where the prompt contains several examples of inputs and their corresponding outputs, to “guide” the LLM toward the desired answers.
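To make the few-shot idea concrete, here is a minimal sketch of how such a prompt can be assembled. The instruction wording, the `Example` type, the `buildFewShotPrompt` name and the sample haiku are all invented for illustration; the prompt actually used on the site is not reproduced here.

```typescript
// One worked example shown to the model: an input headline and the desired output.
interface Example {
  headline: string;
  haiku: string;
}

function buildFewShotPrompt(examples: readonly Example[], headline: string): string {
  // Illustrative instruction text, not the site's real prompt.
  const instruction =
    "Write a haiku (three lines of 5, 7 and 5 syllables) inspired by the news headline.";
  // Each example pairs an input with its expected output, in the same layout
  // the model is asked to complete at the end.
  const shots = examples.map((e) => "Headline: " + e.headline + "\nHaiku:\n" + e.haiku);
  // The prompt ends on an open "Haiku:" so the model continues with the poem.
  const parts = [instruction].concat(shots, ["Headline: " + headline + "\nHaiku:"]);
  return parts.join("\n\n");
}

// Made-up example data, only to show the shape of the input.
const sampleExamples: Example[] = [
  {
    headline: "Heavy snow closes mountain roads",
    haiku: "Snow on the high pass\nheadlights fade into the white\nthe road waits for spring",
  },
];

const samplePrompt = buildFewShotPrompt(sampleExamples, "City opens its first night market");
```

The resulting string is what would be sent to the model, together with the chosen hyper-parameters.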

I first tried using the LLM to categorize and summarize news titles, but it turned out to be very difficult to get a haiku that matched the original topic, and generation took too much time. Generating the haiku directly from the article title makes sure that the topic is accurate, but the haikus lack emotion and originality.

Next.js 14

This is my first project with Next.js 14 and the App Router. There was a learning curve in figuring out how to use Server Components and Client Components, how to force Server Components to re-render when the database was mutated, and so on.

The good thing is that now I have plenty of topics to write for further blog articles!

Having everything under a free tier

As this is just a side project, I don’t want to spend much money running it, so I try to stay within the free tiers that providers offer.

This directly limits the number of haikus I can generate in a day, as well as their quality.

Vercel’s hobby plan includes two cron tasks, and each task cannot run for more than 10 seconds. It is difficult to fit the news download, the haiku generation and the corresponding database save within this 10-second limit.
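One possible way to live with such a limit is to give each cron run a time budget and stop before it runs out, leaving the remaining articles for the next run. The sketch below illustrates that idea only; it is not necessarily what the site does. `processWithinBudget` and its parameters are hypothetical, and the per-item estimate is something you would have to measure yourself.

```typescript
// Processes items one by one and stops as soon as the next item would
// probably not finish before the deadline. Returns how many were handled.
async function processWithinBudget<T>(
  items: readonly T[],
  handle: (item: T) => Promise<void>,
  budgetMs: number,            // total time allowed, kept below the platform limit
  estimatedMsPerItem: number,  // rough, measured cost of handling one item
  now: () => number = Date.now // injectable clock, useful for testing
): Promise<number> {
  const deadline = now() + budgetMs;
  let done = 0;
  for (const item of items) {
    if (now() + estimatedMsPerItem > deadline) {
      break; // not enough time left for another item
    }
    await handle(item);
    done++;
  }
  return done;
}
```

With a budget of 8 seconds and an estimate of 3 seconds per haiku, for example, a run would handle two articles and stop, keeping a margin under the 10-second cap.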

Next steps

- Enabling users to see all the generated haikus and rank them

- Looking at the highest ranked haikus

- Statistics on which hyper-parameters generated the best haikus

- Adding sources of haikus other than news article titles

- Better prompt engineering to improve haiku quality